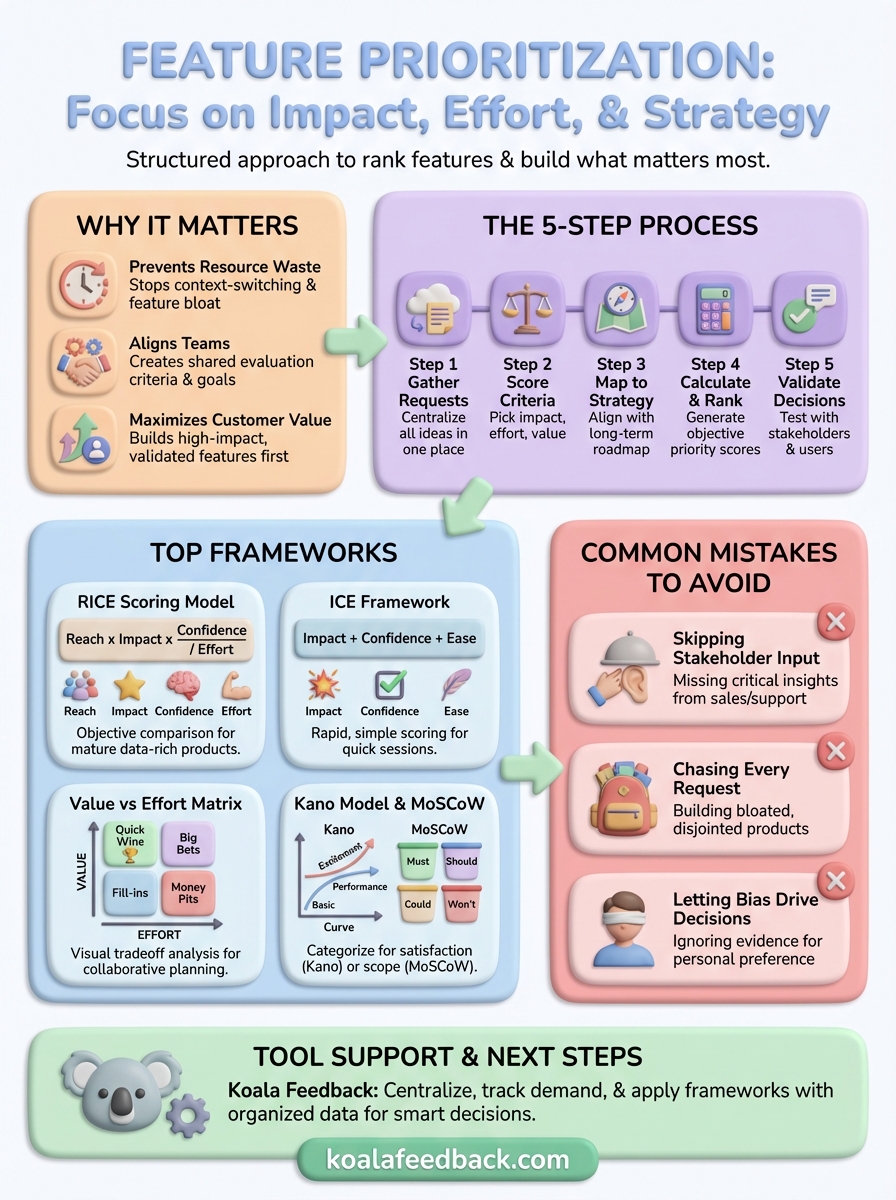

Feature prioritization is how you decide which features to build first. Instead of tackling everything at once or following the loudest voice in the room, you use a structured approach to rank features based on impact, effort, and strategic value. This process helps you focus your team's time and budget on what will move the needle for your users and your business.

Most product teams struggle with this. You have dozens of feature requests piling up from customers, sales, support, and stakeholders. Everyone thinks their idea deserves top priority. Without a clear method to evaluate and rank these requests, you end up building the wrong things or spreading yourself too thin. This article shows you how to prioritize features effectively. You'll learn why prioritization matters, walk through the process step by step, and explore proven frameworks like RICE, ICE, and the Value vs Effort matrix. We'll also cover real examples, common mistakes to dodge, and how to pick the right approach for your team.

You can't build everything. Your team has limited time, budget, and people, so every feature you choose to develop means saying no to something else. Without a clear prioritization process, you end up making decisions based on whoever complains the loudest or which idea sounds most exciting in the moment. This wastes resources and builds features that users don't actually need or want.

When you skip prioritization, your team spreads itself thin across too many projects at once. Developers context-switch constantly, deadlines slip, and nothing ships on time. You might spend months building a feature that only three customers asked for while ignoring the pain point affecting hundreds of users. A clear prioritization process breaks this cycle by giving you a systematic way to evaluate each request before committing resources to it.

Prioritization ensures you invest your resources where they'll generate the biggest return, not where they'll generate the most meetings.

Different departments push for different features. Sales wants tools to close deals faster. Support wants features that reduce ticket volume. Marketing wants capabilities that look good in demos. Without prioritization, these competing demands create internal conflict and a disjointed product. A structured approach gives everyone shared criteria for evaluation, so decisions feel fair and transparent rather than political.

Your users don't care about your internal debates. They care about solving their problems quickly and efficiently. Prioritization forces you to focus on features that deliver measurable value to the most users. You build what matters instead of what's easy or interesting. This leads to higher satisfaction, better retention, and stronger word-of-mouth growth. When you nail prioritization, you ship features that users actually adopt and recommend to others.

You need a repeatable process that turns a messy pile of feature ideas into a ranked list you can act on. This approach works whether you're evaluating five features or fifty. The key is consistency, so every feature gets judged by the same standards instead of gut feeling or internal politics. Here's how to do it.

Start by collecting every feature request into a single source of truth. Pull in ideas from customer support tickets, sales calls, user interviews, analytics data, and stakeholder meetings. You can't prioritize what you can't see. Create a spreadsheet or use a feedback management tool to track each request with details like who asked for it, when, and why they need it. This visibility prevents good ideas from getting lost in Slack threads or email chains.
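The request log described above can be as simple as one record per request, grouped by feature. The sketch below assumes a plain Python list stands in for your spreadsheet or feedback tool. The field names (`feature`, `requester`, `source`, `received`, `reason`) and the sample companies are hypothetical and chosen only for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class FeatureRequest:
    # Hypothetical fields mirroring "who asked for it, when, and why".
    feature: str    # normalized name of the requested capability
    requester: str  # customer or internal stakeholder who asked
    source: str     # where it came from, e.g. "support", "sales", "interview"
    received: date  # when the request arrived
    reason: str     # the problem the requester is trying to solve


def group_by_feature(requests: list[FeatureRequest]) -> dict[str, list[FeatureRequest]]:
    """Collect every request for the same feature into one entry, keeping arrival order."""
    grouped: dict[str, list[FeatureRequest]] = defaultdict(list)
    for request in requests:
        grouped[request.feature].append(request)
    return dict(grouped)


# Hypothetical sample requests.
requests = [
    FeatureRequest("slack-integration", "Acme Co", "sales", date(2024, 3, 1), "Misses task updates"),
    FeatureRequest("slack-integration", "Globex", "support", date(2024, 3, 9), "Team lives in Slack"),
    FeatureRequest("custom-reports", "Initech", "interview", date(2024, 2, 20), "Needs a weekly exec summary"),
]

for feature, asks in group_by_feature(requests).items():
    sources = sorted({r.source for r in asks})
    print(f"{feature}: {len(asks)} request(s) from {', '.join(sources)}")
```

Keeping the original `reason` on every record matters later, when you need to trace a feature back to the problem behind it.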

Pick two to four criteria that matter most to your business right now. Common options include customer impact, business value, development effort, and strategic fit. Assign each feature a numerical score for every criterion using a consistent scale like 1 to 10 or 1 to 5. Don't overthink this part. Your first estimate is usually close enough, and you can refine scores as you learn more.

Scoring forces you to think critically about each feature instead of relying on which idea sounds best in the moment.

Check how each feature connects to your product roadmap and company objectives. A feature might score well on customer demand but conflict with your long-term vision. For example, if you're moving upmarket to enterprise customers, features that only benefit small teams might rank lower even if lots of people want them. This step ensures that your priorities match where you want the product to go.

Use your scoring system to generate a final priority score for each feature. Simple frameworks multiply or add your criteria scores together. More complex ones apply weighted values to emphasize what matters most. The math gives you a consistent, transparent ranking that's easier to defend than "I think we should build this because I like it."
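The weighted approach described above can be sketched in a few lines. This is one possible scheme, not a standard formula. The criterion names, the weights, and the choice to invert effort (so that low effort raises the score) are all assumptions for illustration.

```python
# Hypothetical weights; they sum to 1.0, so the result stays on the 1-10 input scale.
WEIGHTS = {"customer_impact": 0.35, "business_value": 0.25, "strategic_fit": 0.2, "effort": 0.2}
# Criteria where a higher raw score is worse; these are inverted before weighting.
COST_CRITERIA = {"effort"}


def weighted_score(scores: dict[str, float]) -> float:
    """Combine 1-10 criterion scores into one weighted priority score."""
    total = 0.0
    for criterion, weight in WEIGHTS.items():
        value = scores[criterion]  # raises KeyError if a criterion was not scored
        if criterion in COST_CRITERIA:
            value = 11 - value  # on a 1-10 scale, effort 1 becomes 10 and effort 10 becomes 1
        total += weight * value
    return total


# Hypothetical scores for two features.
features = {
    "slack-integration": {"customer_impact": 8, "business_value": 6, "strategic_fit": 7, "effort": 4},
    "custom-reports": {"customer_impact": 9, "business_value": 7, "strategic_fit": 8, "effort": 9},
}

ranking = sorted(features, key=lambda name: weighted_score(features[name]), reverse=True)
for name in ranking:
    print(f"{name}: {weighted_score(features[name]):.2f}")
```

Giving every criterion the same weight reduces this to a plain average of the scores, which is the simple additive version.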

Before you commit to building anything, test your prioritization decisions with the people who matter. Share your ranked list with key stakeholders to spot any major blind spots you missed. Then validate high-priority features with actual users through interviews, surveys, or prototype testing. You want confirmation that users will adopt the feature once you ship it, not just that they said they wanted it six months ago.

Different teams need different approaches to prioritization based on their product stage, team size, and decision-making culture. Some frameworks work better for early-stage startups with limited data, while others shine when you have mature products with rich analytics. The frameworks below give you proven structures to evaluate features consistently. Each one uses different criteria and calculations, so you can pick the approach that fits your constraints and goals.

This framework scores features across four dimensions to generate a single priority score. Reach measures how many users the feature will affect in a given time period. Impact rates how much the feature will improve the experience for those users, typically on a scale from minimal to massive. Confidence reflects how sure you are about your reach and impact estimates, expressed as a percentage. Effort captures the total work required from all team members, usually measured in person-weeks or person-months.

You calculate the RICE score by multiplying Reach, Impact, and Confidence, then dividing by Effort. A feature that reaches 1,000 users per quarter with maximum impact (3 out of 3), 80% confidence, and 4 person-weeks of effort would score (1,000 × 3 × 0.8) ÷ 4 = 600. Higher scores indicate higher priority. This framework excels when you need to compare very different feature types on a common scale, because it accounts for both upside potential and resource cost.
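The RICE calculation is easy to encode. Below is a minimal sketch that reproduces the 600 from the worked example. The function name and its input checks are illustrative choices, not part of any standard library.

```python
def rice_score(reach: float, impact: float, confidence: float, effort: float) -> float:
    """RICE = (Reach x Impact x Confidence) / Effort.

    reach: users affected per period (e.g. per quarter)
    impact: impact multiplier (the example above uses 3 as the top of the scale)
    confidence: a fraction between 0 and 1 (80% -> 0.8)
    effort: person-weeks or person-months; must be positive
    """
    if effort <= 0:
        raise ValueError("effort must be positive")
    if not 0 <= confidence <= 1:
        raise ValueError("confidence must be a fraction between 0 and 1")
    return reach * impact * confidence / effort


print(f"{rice_score(reach=1_000, impact=3, confidence=0.8, effort=4):.0f}")  # 600
```

Keep Reach and Effort in the same units for every feature you compare. Mixing per-month and per-quarter reach, or person-weeks and person-months, silently distorts the ranking.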

ICE simplifies prioritization to three quick scores that most teams can estimate without extensive research. Impact measures the potential positive effect on your goal metric. Confidence captures how certain you are that the feature will deliver the expected impact. Ease represents how simple the feature is to implement relative to other options.

Score each dimension on a scale from 1 to 10, then average the three scores. This simplicity makes ICE a good fit for rapid prioritization sessions when you need to rank dozens of features quickly. At its core, feature prioritization calls for a practical method you can apply consistently, and ICE delivers exactly that without requiring detailed data or complex calculations.
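Here is a small sketch of ICE scoring as described above: three 1-to-10 scores, averaged, then sorted. The backlog items and their scores are hypothetical.

```python
def ice_score(impact: int, confidence: int, ease: int) -> float:
    """Average three 1-10 scores into a single ICE score."""
    for name, value in (("impact", impact), ("confidence", confidence), ("ease", ease)):
        if not 1 <= value <= 10:
            raise ValueError(f"{name} must be between 1 and 10, got {value}")
    return (impact + confidence + ease) / 3


# Hypothetical backlog: (impact, confidence, ease) for each feature.
backlog = {
    "onboarding-checklist": (7, 8, 9),
    "dark-mode": (4, 9, 6),
    "sso": (9, 5, 3),
}

ranked = sorted(backlog.items(), key=lambda item: ice_score(*item[1]), reverse=True)
for name, scores in ranked:
    print(f"{name}: {ice_score(*scores):.1f}")
```

Some teams multiply the three scores instead of averaging them. A product penalizes a single low score more heavily, so the ordering can change between the two variants. Pick one and use it consistently.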

ICE works best when you need to move fast and accept some subjectivity in exchange for speed.

This visual framework plots features on a two-by-two grid where one axis represents value to users or the business and the other represents implementation effort. You create four quadrants: high value with low effort (quick wins), high value with high effort (big bets), low value with low effort (fill-ins), and low value with high effort (money pits). Quick wins go first because they deliver maximum return for minimal investment.

The matrix makes prioritization discussions easier because stakeholders can see the tradeoffs immediately. You typically score value and effort on a simple 1-to-5 or 1-to-10 scale, though some teams use more granular scoring. This approach works particularly well in collaborative settings where visual tools help align different perspectives quickly.
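The quadrant placement can be sketched as a simple function over 1-to-5 scores. The midpoint of 3 is an assumption: a score of exactly 3 counts as "low" here, and you may prefer a different cut-off. The feature names and scores are hypothetical.

```python
def quadrant(value: int, effort: int, midpoint: float = 3) -> str:
    """Place a feature scored 1-5 on value and effort into one of four quadrants.

    Scores strictly above the midpoint count as "high" (an assumed cut-off).
    """
    high_value = value > midpoint
    high_effort = effort > midpoint
    if high_value and not high_effort:
        return "quick win"
    if high_value and high_effort:
        return "big bet"
    if not high_value and not high_effort:
        return "fill-in"
    return "money pit"


# Hypothetical (value, effort) scores.
features = {"bulk-edit": (4, 2), "sso": (5, 5), "emoji-reactions": (2, 1), "legacy-export": (2, 4)}
for name, (value, effort) in features.items():
    print(f"{name}: {quadrant(value, effort)}")
```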

This framework categorizes features into three types based on how they affect customer satisfaction. Basic features are expected fundamentals: they cause dissatisfaction when missing but don't increase satisfaction when present. Performance features create proportional satisfaction; the better they perform, the more satisfied customers are, and customers notice improvements. Excitement features delight users beyond expectations and create disproportionate satisfaction, but their absence doesn't cause dissatisfaction.
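The three definitions above reduce to two questions: does the feature raise satisfaction when present, and does its absence cause dissatisfaction? The sketch below encodes only that simplification. Full Kano analysis derives categories from paired survey questions, and it treats performance as a matter of degree rather than a yes/no answer. The fourth combination, labeled "indifferent" here, is a category the Kano model also recognizes, though this article doesn't discuss it.

```python
from enum import Enum


class KanoCategory(Enum):
    BASIC = "basic"
    PERFORMANCE = "performance"
    EXCITEMENT = "excitement"
    INDIFFERENT = "indifferent"  # neither effect observed (not covered in the text)


def kano_category(satisfies_when_present: bool, dissatisfies_when_absent: bool) -> KanoCategory:
    """Simplified mapping from two yes/no observations to a Kano category."""
    if dissatisfies_when_absent and not satisfies_when_present:
        return KanoCategory.BASIC  # expected fundamental
    if dissatisfies_when_absent and satisfies_when_present:
        return KanoCategory.PERFORMANCE  # more is better
    if satisfies_when_present:
        return KanoCategory.EXCITEMENT  # delights, but nobody misses it
    return KanoCategory.INDIFFERENT


print(kano_category(satisfies_when_present=False, dissatisfies_when_absent=True).value)
```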

Understanding these categories helps you balance your roadmap intelligently. You need enough basic features to meet baseline expectations, performance features to compete effectively, and excitement features to differentiate your product. The Kano model reminds you that not all features contribute to satisfaction in the same way, so raw popularity scores can mislead.

MoSCoW sorts features into four buckets without numerical scoring. Must-have features are non-negotiable for the release to succeed. Should-have features are important but not critical; the release can work without them. Could-have features are nice additions you'll include if time and resources allow. Won't-have features are explicitly out of scope for this release, though you might revisit them later.

This method excels for scope management in time-boxed projects where you need clear boundaries. Sales teams and executives often find it easier to understand than numerical frameworks because the categories map to natural language decisions. You avoid the false precision of scoring systems while still creating a clear, defensible priority order.
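For a time-boxed release, MoSCoW buckets can drive a simple scope check. In the sketch below, Must-haves have to fit in the available capacity, and Should-haves and then Could-haves fill whatever room is left. The greedy fill order, the person-week units, and the backlog are assumptions for illustration, not part of the method itself.

```python
from enum import IntEnum


class MoSCoW(IntEnum):
    # Lower value = higher priority, so sorting by bucket puts Must first.
    MUST = 0
    SHOULD = 1
    COULD = 2
    WONT = 3


def plan_release(features: list[tuple[str, MoSCoW, float]], capacity: float) -> list[str]:
    """Fill a time-boxed release in Must > Should > Could order.

    features: (name, bucket, effort in person-weeks); capacity: person-weeks available.
    Won't-haves are always excluded; items that no longer fit are skipped.
    """
    must_effort = sum(effort for _, bucket, effort in features if bucket is MoSCoW.MUST)
    if must_effort > capacity:
        raise ValueError(f"must-haves need {must_effort} person-weeks, only {capacity} available")
    scope, used = [], 0.0
    for name, bucket, effort in sorted(features, key=lambda f: f[1]):  # stable within a bucket
        if bucket is MoSCoW.WONT:
            continue
        if used + effort <= capacity:
            scope.append(name)
            used += effort
    return scope


# Hypothetical backlog.
backlog = [
    ("login", MoSCoW.MUST, 3),
    ("password-reset", MoSCoW.MUST, 2),
    ("csv-export", MoSCoW.SHOULD, 2),
    ("themes", MoSCoW.COULD, 2),
    ("mobile-app", MoSCoW.WONT, 10),
]
print(plan_release(backlog, capacity=8))
```

If the Must-haves alone overflow the time box, the function refuses to plan. That forces a scope conversation instead of quietly dropping something "non-negotiable."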

A prioritization matrix only works if you apply it consistently and make decisions based on the results. The process requires more than just picking a framework and scoring features once. You need to establish clear evaluation criteria, involve the right people, and create a rhythm for reviewing priorities as conditions change. The practical application determines whether your prioritization efforts actually shape what gets built or just become another planning exercise that everyone ignores.

Define exactly what each score means before you start evaluating features. If you're using a 1-to-5 scale for effort, document what qualifies as a 1 versus a 5 in terms your whole team understands. A score of 1 might mean one developer can finish the feature in a single sprint, while 5 might require multiple team members over several months. Create similar definitions for every dimension you're scoring. This prevents the common problem where different people interpret the same scale differently, which turns prioritization into a debate about scoring semantics rather than actual priorities.
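One way to make an effort rubric unambiguous is to write it down as data that maps engineering estimates to scores. In this sketch, only the endpoints come from the text (1 = one developer in a single sprint, 5 = multiple team members over several months). The two-week sprint and the intermediate thresholds are hypothetical.

```python
# Hypothetical effort rubric, assuming two-week sprints. Each entry is
# (upper bound in person-weeks, score, plain-language definition).
EFFORT_RUBRIC = [
    (2, 1, "one developer, within a single sprint"),
    (4, 2, "one developer, about two sprints"),
    (8, 3, "two developers for about a month"),
    (16, 4, "several people for one to two months"),
    (float("inf"), 5, "multiple team members over several months"),
]


def effort_score(person_weeks: float) -> int:
    """Translate an engineering estimate into the documented 1-5 effort score."""
    if person_weeks <= 0:
        raise ValueError("estimate must be positive")
    for upper_bound, score, _definition in EFFORT_RUBRIC:
        if person_weeks <= upper_bound:
            return score
    raise AssertionError("unreachable: the last bound is infinite")


for estimate in (1.5, 6, 20):
    print(estimate, "->", effort_score(estimate))
```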

Schedule a dedicated session where product managers, engineers, designers, and other key stakeholders review features together. Present each feature request with context about who wants it and why. Then score it across your chosen dimensions as a group. Engineers provide the best estimates for technical effort. Product managers contribute insights about customer impact and business value. The collaborative approach surfaces blind spots that individual scoring would miss.

Team-based scoring reduces bias and creates shared ownership of prioritization decisions.

Track your scores in a simple spreadsheet or table that everyone can access. You don't need fancy software to make this work. The transparency matters more than the tool.
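If participants jot down their own scores before the group discussion, a tiny script can show where the team already agrees and where it doesn't. The sketch below assumes independent 1-to-5 scores per person. The median as the starting value and a spread threshold of 1 are hypothetical choices, and the session data is invented for illustration.

```python
from statistics import median

# Hypothetical individual 1-5 scores gathered in a scoring session.
session = {
    "slack-integration": {"impact": [4, 4, 5], "effort": [2, 3, 2]},
    "custom-reports": {"impact": [5, 4, 5], "effort": [2, 5, 4]},
}


def summarize(
    scores_by_feature: dict[str, dict[str, list[int]]], max_spread: int = 1
) -> dict[str, tuple[dict[str, float], list[str]]]:
    """Return the median score per dimension plus the dimensions that need discussion.

    A spread (max - min) above max_spread suggests people are working from
    different assumptions, which is worth talking through before settling a score.
    """
    summary = {}
    for feature, dimensions in scores_by_feature.items():
        medians = {dim: median(votes) for dim, votes in dimensions.items()}
        disputed = [dim for dim, votes in dimensions.items() if max(votes) - min(votes) > max_spread]
        summary[feature] = (medians, disputed)
    return summary


for feature, (medians, disputed) in summarize(session).items():
    note = f" (discuss: {', '.join(disputed)})" if disputed else ""
    print(f"{feature}: {medians}{note}")
```

A wide spread on effort, as with `custom-reports` here, is usually the cue to let engineers walk through their estimates.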

Take your scores and map them visually onto your matrix or calculate final priority scores depending on your framework. The visualization makes patterns obvious that numbers alone might hide. You'll quickly spot clusters of quick wins you can knock out together or realize that several high-priority features depend on the same infrastructure work. Review the results with stakeholders to check if the ranking makes intuitive sense based on what they know about your product strategy and market position. When the data contradicts strong instincts, dig deeper to understand why rather than dismissing either the scores or the gut feeling.

Real prioritization decisions reveal how frameworks work in practice and what tradeoffs teams actually make. These examples show how different contexts and constraints shape the way you apply feature prioritization to specific problems. Each scenario demonstrates how the same feature request can rank differently depending on your product stage, business model, and strategic goals. You'll see teams using frameworks to handle common situations like competing stakeholder demands, technical debt, and resource limitations.

A project management SaaS receives three major feature requests with strong customer demand. The first is advanced reporting that would let users create custom dashboards. The second is Slack integration for real-time notifications. The third is API rate limit increases for enterprise customers. Using the RICE framework with impact scored on a 1-to-5 scale, the team scores each feature. Slack integration reaches 5,000 users per month with high impact (3), strong confidence (90%), and moderate effort (6 person-weeks), producing a score of 2,250. Custom reporting reaches 2,000 users with massive impact (5), medium confidence (60%), and heavy effort (12 person-weeks), scoring 500. API improvements reach only 50 enterprise accounts but with critical impact (5), full confidence (100%), and minimal effort (2 person-weeks), scoring 125.
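The arithmetic behind these three scores can be checked with a short script. The figures are taken from the example, where 5 is the top impact score. The `rice_score` helper is an illustrative name, not part of any tool.

```python
def rice_score(reach: float, impact: float, confidence: float, effort: float) -> float:
    """(Reach x Impact x Confidence) / Effort."""
    return reach * impact * confidence / effort


# (reach, impact, confidence, effort in person-weeks), as given in the example.
candidates = {
    "Slack integration": (5_000, 3, 0.90, 6),
    "Custom reporting": (2_000, 5, 0.60, 12),
    "API rate limit increases": (50, 5, 1.00, 2),
}

scores = {name: rice_score(*inputs) for name, inputs in candidates.items()}
for name, score in sorted(scores.items(), key=lambda item: item[1], reverse=True):
    print(f"{name}: {score:,.0f}")
```

The script only ranks the options. As the next paragraph shows, the team still weighs strategic factors that the score doesn't capture.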

The team chooses Slack integration first even though custom reporting has the higher impact score. The framework reveals that integration delivers substantial value to the most users with manageable effort, making it the best use of limited development capacity. They schedule API improvements for the next sprint because enterprise retention matters strategically, even though the raw RICE score looks small. Custom reporting moves to the following quarter, when they'll have more engineering bandwidth for complex features. This decision keeps the team from sinking twelve person-weeks into reporting while losing ground on quicker improvements that users can adopt immediately.

Prioritization frameworks turn subjective debates about which feature matters most into objective conversations about return on investment and strategic fit.

A fitness tracking app needs to decide between four requested features using the Value vs Effort matrix. Users want social sharing to compete with friends, offline workout tracking when the network drops, personalized meal plans, and Apple Watch integration. The product team plots each feature. Social sharing lands in the high value, low effort quadrant as a clear quick win since the team already built sharing infrastructure for another feature. Offline tracking sits in high value, high effort territory because network reliability directly affects user retention but requires significant architecture changes.

Meal plans fall into low value, high effort because only 15% of users requested it and implementation requires nutrition expertise the team lacks. Apple Watch integration scores medium value and medium effort since it would serve the growing wearables market but needs specialized platform knowledge. The team builds social sharing first to capture immediate wins, then tackles offline tracking because retention data shows users churning after network failures during workouts. They delay meal plans indefinitely and explore partnership options for Watch integration rather than building it in-house. This sequencing lets them ship two features in the time they might have spent on just one, and both features address validated user pain points instead of speculative demands.

An enterprise software company debates whether to refactor legacy code or build new customer-requested features. Their technical debt causes slow performance and makes new development increasingly expensive. Using the Kano model, they categorize requests. Database optimization is a basic feature because customers expect reasonable speed, and current performance falls below that threshold. Advanced analytics is a performance feature that would differentiate them competitively. AI-powered recommendations qualify as an excitement feature that could surprise customers.

Engineers estimate the refactoring work at eight weeks but predict it would reduce future feature development time by 40%. The team prioritizes database work first because it addresses the basic expectation gap causing customer complaints. They bundle it with one quick analytics enhancement to show visible progress to customers. This combination satisfies immediate performance needs while demonstrating forward momentum on requested capabilities, and it creates the technical foundation that makes the excitement features feasible to build later without massive effort.
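It helps to check when a refactor like this pays for itself. The sketch assumes the 40% saving applies evenly to all feature work after the refactor, and that "weeks" means the same team capacity throughout. Under those assumptions, each week of feature work (at today's pace) saves 0.4 weeks, so the eight-week investment is repaid after 8 / 0.4 = 20 weeks of feature work.

```python
def break_even_weeks(refactor_weeks: float, speedup_fraction: float) -> float:
    """Weeks of feature work (at today's pace) after which a refactor has paid for itself.

    Assumes every week of future feature work shrinks by speedup_fraction, so
    cumulative savings equal the refactor cost after refactor_weeks / speedup_fraction.
    """
    if not 0 < speedup_fraction < 1:
        raise ValueError("speedup_fraction must be between 0 and 1")
    return refactor_weeks / speedup_fraction


print(f"{break_even_weeks(8, 0.40):.0f} weeks")  # 20 weeks
```

If the roadmap holds well over 20 weeks of feature work in the affected code, the refactor is likely to pay off within the planning horizon.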

You can undermine even the best prioritization framework by falling into common traps that distort your judgment and waste resources. These mistakes happen in every product team, but you can spot and correct them once you know what to watch for. Good feature prioritization also means recognizing the patterns that derail decision-making so you can build defenses against them before they damage your roadmap.

Product managers who prioritize features in isolation miss critical information that other teams possess. Engineers understand technical constraints and dependencies you might overlook. Sales teams know which features close deals and which ones customers forget about after the first demo. Support teams see patterns in user frustration that analytics data won't reveal. When you exclude these voices, you build an incomplete picture that leads to poor priority decisions.

Fix this by scheduling regular prioritization sessions that include representatives from engineering, sales, support, and other key functions. Share your scoring criteria ahead of time so participants come prepared with relevant insights. Create space for healthy debate about assumptions rather than treating prioritization as a solo exercise you present as finished work.

Customers tell you what they want, but they don't always know what they need or how to solve their underlying problems. Building every requested feature turns your product into a bloated mess that tries to please everyone and satisfies no one. You end up with dozens of shallow capabilities instead of core features that deliver real value.

Customer feedback matters enormously, but raw feature requests require translation into actual user needs before they belong in your prioritization process.

Validate each request by digging into the root problem driving the ask. Sometimes users request complex features when simple workflow improvements would solve their issue faster. Other times, multiple seemingly different requests point to the same underlying pain point that one well-designed feature could address completely.

Your personal preferences, recent conversations, and emotional attachments to certain features cloud objective prioritization. You might overvalue features you find personally exciting while underestimating ones that solve boring but critical problems. This bias becomes especially dangerous when executives or influential customers push for specific features, creating pressure to inflate scores artificially.

Combat bias by requiring evidence for every score you assign. Document the data, research, or customer quotes that support your impact estimates. When scores seem suspiciously aligned with personal preferences or political pressure, challenge yourself to find contradicting evidence before finalizing the priority ranking.
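The rule "no score without evidence" is easy to enforce when each score carries its supporting notes. The sketch below uses a hypothetical `Score` record and flags any score with no evidence attached. The field names and sample entries are illustrative only.

```python
from dataclasses import dataclass, field


@dataclass
class Score:
    # Hypothetical record: one score for one feature on one criterion.
    feature: str
    criterion: str
    value: int
    # Data, research notes, or customer quotes that justify the value.
    evidence: list[str] = field(default_factory=list)


def unsupported(scores: list[Score]) -> list[Score]:
    """Return every score with no non-blank evidence behind it."""
    return [s for s in scores if not any(item.strip() for item in s.evidence)]


scores = [
    Score("slack-integration", "impact", 4, ["Q1 support-ticket review: missed-notification complaints"]),
    Score("ai-recommendations", "impact", 5),  # exciting idea, but nothing backs the 5 yet
]
for s in unsupported(scores):
    print(f"Needs evidence before finalizing: {s.feature} / {s.criterion} = {s.value}")
```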

No single framework works perfectly for every team. Your product stage, team size, and available data determine which approach delivers the best results. A startup with three people and limited analytics needs different tools than an established company with millions of users and rich behavioral data. Effective feature prioritization means matching the framework to your specific constraints and decision-making style rather than blindly copying what works for other companies. The wrong framework creates friction that makes prioritization feel harder than it should be.

Early-stage products need simple, fast frameworks that don't depend on extensive historical data. ICE works well here because you can score features based on educated estimates and market research without needing usage metrics from thousands of customers. Your confidence scores will reflect the uncertainty that naturally comes with building something new.

Mature products benefit from data-rich frameworks like RICE that incorporate actual user reach numbers and validated impact predictions. You have the analytics infrastructure to measure how many users encounter specific workflows and the historical data to predict how feature changes affect key metrics. This foundation lets you make prioritization decisions with significantly higher confidence than teams working from assumptions alone.

Choose frameworks that match your current data availability rather than aspirational data you wish you had.

Small teams under ten people need frameworks they can apply in quick collaborative sessions without extensive preparation. Value vs Effort matrices work particularly well because you can sketch them on a whiteboard during sprint planning and reach consensus within an hour. The visual nature helps everyone understand the tradeoffs immediately without mathematical calculations.

Larger organizations with multiple stakeholders require frameworks that create transparent, defensible decisions across departments. RICE and MoSCoW provide the structure needed to align engineering, sales, support, and executive teams around shared priority criteria. These frameworks produce documentation that explains why specific features ranked higher than others, reducing the political debates that waste time in bigger companies. You'll spend more time on the initial scoring process, but you'll save weeks of circular discussions about whose features matter most.

Prioritization frameworks only work when you have clean, organized data to feed into them. Koala Feedback handles the messy groundwork of collecting, organizing, and measuring feature requests so you can focus on making smart decisions instead of hunting for scattered feedback across email, support tickets, and Slack channels. The platform turns chaotic input into structured information that any prioritization framework can use effectively.

You need every feature request in one accessible place before you can prioritize anything. Koala Feedback creates a single portal where customers, internal teams, and stakeholders submit ideas directly. The system automatically deduplicates similar requests and categorizes them based on your product areas, so you don't waste time manually sorting through hundreds of submissions to find patterns. This centralization eliminates the common problem where engineers build features based on recent conversations while ignoring validated demand from months of accumulated feedback.

When feedback lives in one system instead of scattered across departments, you see the full picture of what users actually need rather than what the loudest voice requested yesterday.

Raw submission counts mislead because they don't reflect actual user intensity or breadth. Koala Feedback lets users vote on feature requests and add comments explaining their specific use cases. These votes give you quantifiable data for the reach and impact dimensions that frameworks like RICE require. You can see which features have passionate support from a small group versus broad interest across your entire user base, which helps you judge whether the features at the top of your list will serve the right audience segments.
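To tell passionate support from a small group apart from broad interest, you can compare total votes with the number of distinct accounts behind them. The sketch assumes exported vote rows of the form (feature, voter, account). That row shape is hypothetical and is not Koala Feedback's actual export format, and the sample data is invented.

```python
from collections import Counter

# Hypothetical export rows: (feature, voter email, account).
votes = [
    ("sso", "it@bigcorp.example", "BigCorp"),
    ("sso", "cto@bigcorp.example", "BigCorp"),
    ("sso", "ops@bigcorp.example", "BigCorp"),
    ("sso", "admin@smallco.example", "SmallCo"),
    ("dark-mode", "a@one.example", "One"),
    ("dark-mode", "b@two.example", "Two"),
    ("dark-mode", "c@three.example", "Three"),
    ("dark-mode", "d@four.example", "Four"),
]


def vote_profile(rows: list[tuple[str, str, str]], feature: str) -> dict[str, float]:
    """Summarize how concentrated the votes for one feature are."""
    per_account = Counter(account for f, _voter, account in rows if f == feature)
    total = sum(per_account.values())
    if total == 0:
        return {"votes": 0, "accounts": 0, "top_account_share": 0.0}
    return {
        "votes": total,
        "accounts": len(per_account),
        # Share of all votes that came from the single most active account.
        "top_account_share": max(per_account.values()) / total,
    }


for feature in ("sso", "dark-mode"):
    p = vote_profile(votes, feature)
    print(f"{feature}: {p['votes']} votes from {p['accounts']} accounts, top account {p['top_account_share']:.0%}")
```

Both features have four votes. However, `sso` is driven mostly by one account, while `dark-mode` has broad support, which is the distinction that matters when you estimate Reach.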

Koala Feedback structures your feedback collection to support any prioritization method you prefer. The platform organizes requests into logical boards that map to your product areas, making it easier to score features within context rather than comparing unrelated capabilities. You can export feedback data with vote counts, user segments, and categorization to plug directly into your scoring spreadsheets or matrices. The public roadmap feature then lets you communicate your prioritization decisions transparently, showing users which features moved from planned to in progress to completed based on the framework you applied.

You now understand what feature prioritization is and how to apply it effectively. The frameworks we covered give you structured methods to evaluate features based on impact, effort, and strategic value instead of gut feelings or political pressure. Start by choosing a framework that matches your team size and available data, then establish clear scoring criteria that everyone understands. Gather feedback systematically, score features collaboratively, and validate your priorities before committing resources to development.

Prioritization only works when you act on it consistently. Review your rankings regularly as market conditions and user needs shift, and communicate your decisions transparently so stakeholders understand the tradeoffs. The right tools make this process dramatically easier by centralizing feedback and tracking demand automatically. Try Koala Feedback to collect feature requests, organize priorities, and share your roadmap with users in one platform designed specifically for product teams that want to build what matters most.

Start today and have your feedback portal up and running in minutes.